# Fine-Tune Llama 2 Models with Ray and DeepSpeed

This repository contains an example that fine-tunes Llama 2 models with Ray on OpenShift AI, using Hugging Face (HF) Transformers, Accelerate, PEFT (LoRA), and DeepSpeed.

It adapts the "Fine-tuning Llama-2 series models with Deepspeed, Accelerate, and Ray Train TorchTrainer" example [1] from the Ray project so that it runs on OpenShift AI using the Distributed Workloads stack.
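LoRA (via PEFT) keeps the number of trainable parameters small compared with the frozen base model. The sketch below estimates that number from the model's dimensions. It assumes the published Llama 2 7B shape and a LoRA rank of 8. The target modules the example's own LoRA configuration uses may differ. `LlamaShape`, `linear_shapes`, `base_param_count`, and `lora_param_count` are hypothetical helpers written for illustration; they are not part of PEFT or Ray.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LlamaShape:
    """Dimensions of a Llama-style decoder (no biases, untied embeddings)."""
    vocab_size: int
    hidden_size: int
    intermediate_size: int
    num_layers: int


# Assumption: published Llama 2 7B dimensions. Larger variants differ (70B uses GQA).
LLAMA2_7B = LlamaShape(vocab_size=32000, hidden_size=4096,
                       intermediate_size=11008, num_layers=32)


def linear_shapes(s: LlamaShape) -> dict[str, tuple[int, int]]:
    """(in_features, out_features) of each linear layer in one decoder block."""
    h, m = s.hidden_size, s.intermediate_size
    return {
        "q_proj": (h, h), "k_proj": (h, h), "v_proj": (h, h), "o_proj": (h, h),
        "gate_proj": (h, m), "up_proj": (h, m), "down_proj": (m, h),
    }


def base_param_count(s: LlamaShape) -> int:
    """Total parameters of the frozen base model."""
    linears = sum(i * o for i, o in linear_shapes(s).values())
    norms = 2 * s.hidden_size  # input and post-attention RMSNorm weights
    embeddings = 2 * s.vocab_size * s.hidden_size  # embed_tokens + lm_head
    return s.num_layers * (linears + norms) + embeddings + s.hidden_size  # + final norm


def lora_param_count(s: LlamaShape, rank: int, targets: list[str]) -> int:
    """Trainable LoRA parameters: A is (rank x in), B is (out x rank) per adapted layer."""
    shapes = linear_shapes(s)
    per_layer = sum(rank * (shapes[t][0] + shapes[t][1]) for t in targets)
    return s.num_layers * per_layer


if __name__ == "__main__":
    base = base_param_count(LLAMA2_7B)
    # Hypothetical target sets: attention q/v only, then every linear layer.
    for targets in (["q_proj", "v_proj"], list(linear_shapes(LLAMA2_7B))):
        lora = lora_param_count(LLAMA2_7B, rank=8, targets=targets)
        print(f"r=8 on {targets}: {lora:,} trainable of {base:,} "
              f"({100 * lora / base:.3f}%)")
```

LoRA's cost grows with `rank * (in + out)` per adapted layer, not `in * out`. Because of that, even adapting every linear layer at rank 8 trains well under 1% of the base weights in this estimate.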

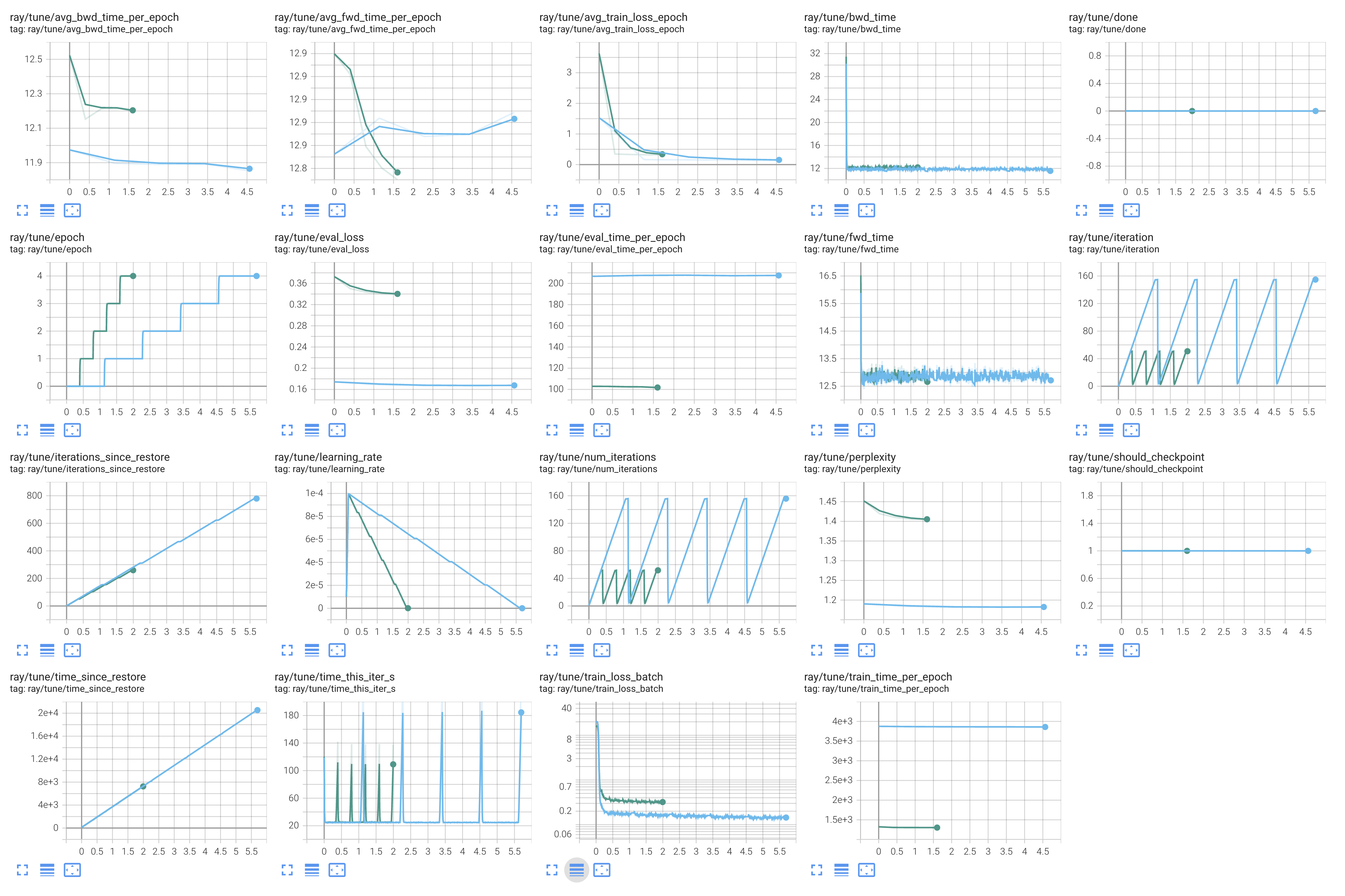

Overview of the Ray Dashboard during fine-tuning:

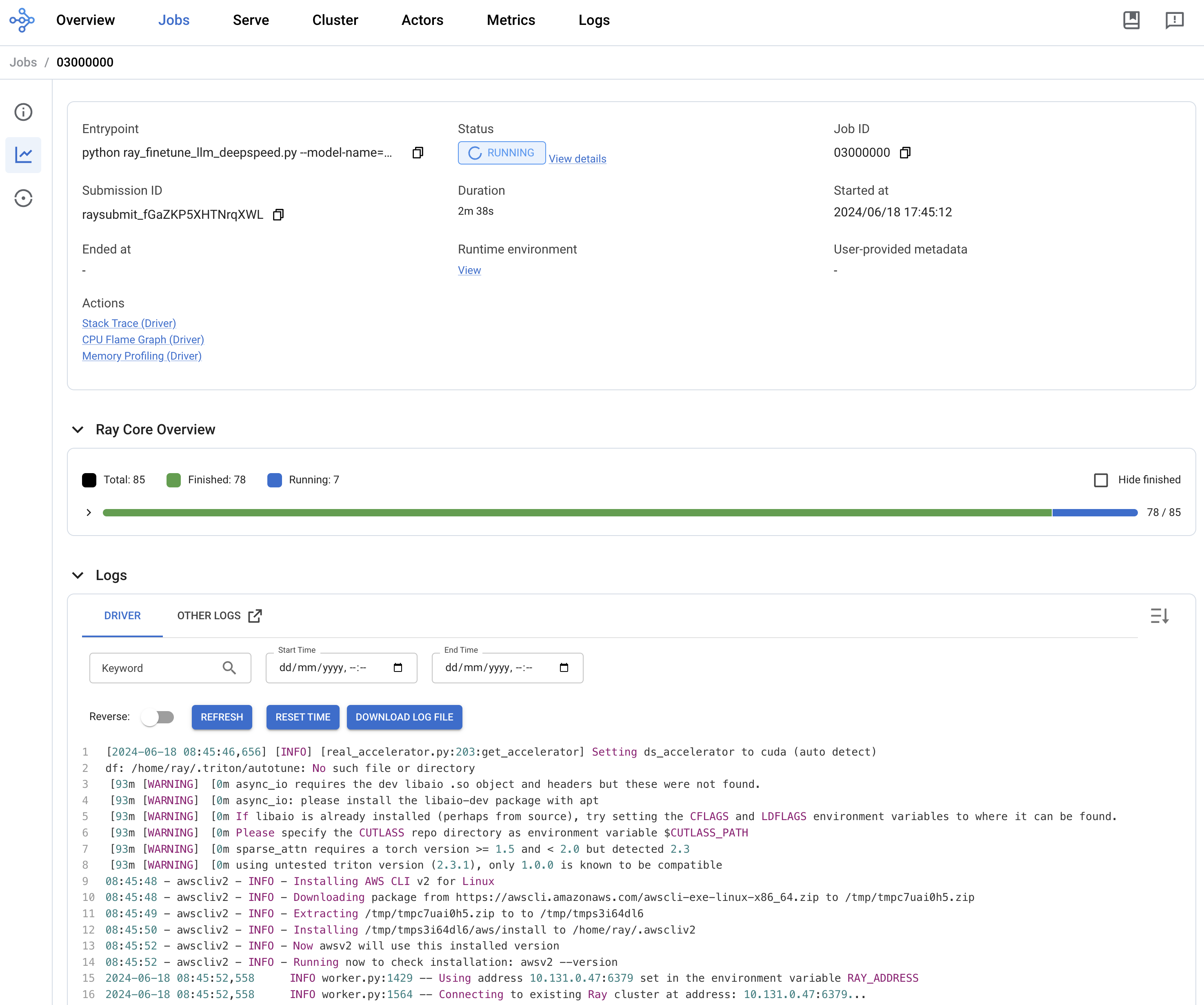

Example of a visualization comparing experiments with different combinations of context length and batch size: